Misleading claims about Covid-19 vaccines are not being fact-checked on Facebook, a new study finds. Further analysis highlights inconsistencies in the application of Facebook's policies.

A study by the Institute for Strategic Dialogue (ISD) found that the number of Irish Facebook groups and pages hosting and disseminating vaccine disinformation doubled in six months. By February this year, there were more than 130,800 users in these groups.

Facebook has launched several initiatives since the vaccine rollout commenced in the state in December. It updated its Covid-19 policy to remove false claims about “the safety, efficacy, ingredients or side effects of the vaccines.” This policy was expanded in February, and in March Facebook launched the Together Against Covid-19 Misinformation campaign in partnership with the World Health Organisation. As part of Facebook's efforts to “aggressively” tackle misinformation, this campaign aimed to give people “additional resources to scrutinize content they see online, helping them to decide what to read, trust and share.”

ISD study

The ISD, a London-based think tank, analysed Irish Facebook content throughout January. It monitored more than 40 groups and pages, which hosted more than 20,900 posts including photos, links, status updates, and videos. These posts generated a total of 467,000 interactions (likes, comments and shares). This is an 82 per cent increase in the level of interactions compared to six months prior.

There are glaring inconsistencies in the content Facebook fact checks. This is evident in the vast number of false claims about vaccine ingredients, vaccine related deaths, and conspiracy theories.

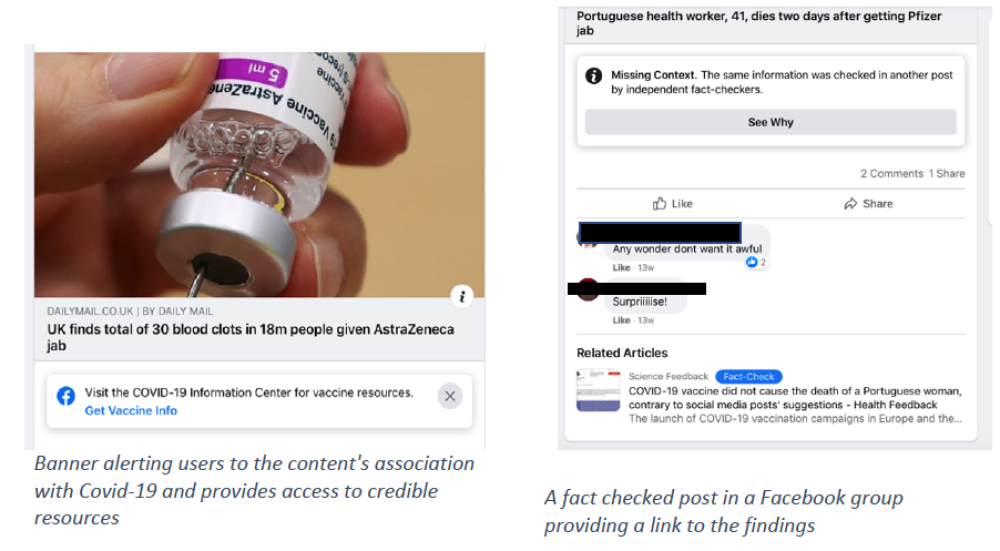

In some instances, these unsubstantiated claims are accompanied by a fact check from Health Feedback, an official fact checking partner of Facebook. These indicate that the information is inaccurate or missing context and include a link to reliable information.

Other posts indicate that the information relates to Covid-19 and links to authorised medical websites on Facebook’s Covid-19 Information Centre. In Ireland’s case, users can access information from the HSE and the WHO.

Fact checking inconsistencies

Further analysis of the ISD data finds that the exact same posts and content are fact checked in some instances, but not in others.

In the first week of January, there were 138 posts in the Facebook groups and 33 posts in Facebook pages. Groups allow members to post and interact freely within the group, while pages have designated admins who post, and users can only like, share or comment on the posts. Of the 33 posts in Facebook pages, none were fact checked or flagged as hosting content relating to the vaccine. Yet, there were instances when the exact same posts appeared in Facebook groups with fact checks.

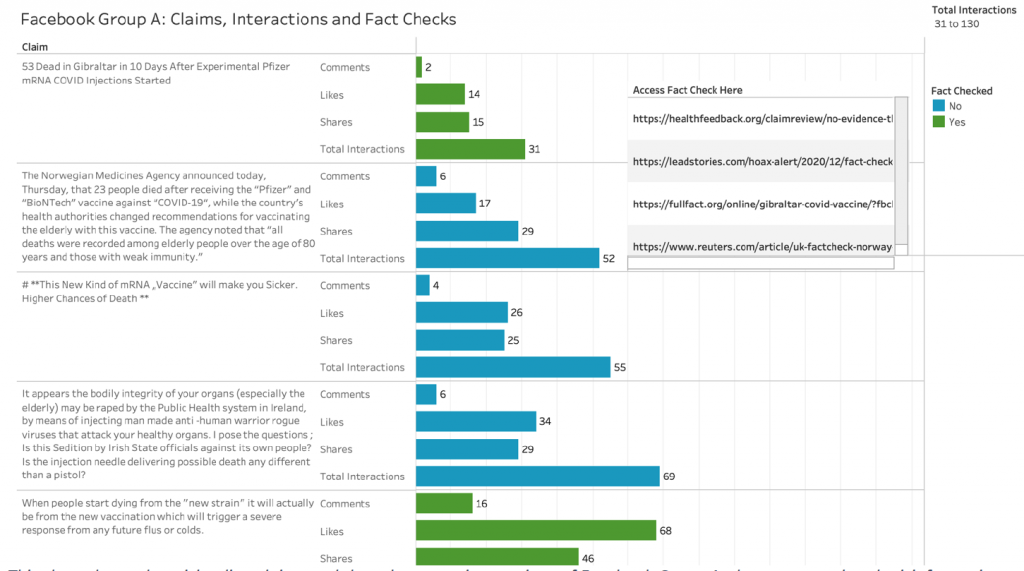

In a review of five posts picked at random within the most populated misinformation Facebook group, which currently has over 19,000 members, two posts had been fact checked, while the remaining three had not.

In the above chart, the last three claims were posted alongside videos of Professor Dolores Cahill, a faculty member at University College Dublin’s School of Medicine and former chair of the Irish Freedom Party. In these videos, Cahill links the vaccine to processes known as “cytokine storm” and antibody dependent enhancement (ADE).

A cytokine storm is a phenomenon in which the immune system goes into overdrive. False claims linking mRNA vaccines to a cytokine storm have been debunked by Reuters. ADE occurs when antibodies enhance a virus’ ability to infect cells. “Although ADE has been observed in humans who received the dengue vaccine, as well as in individuals that received a vaccine candidate for the respiratory syncytial virus, the evidence from COVID-19 vaccine clinical trials so far have not shown more severe disease occurring in vaccinated participants,” a fact check by Poynter stated.

In the Facebook group, only one of the three posts containing Cahill's claims was fact checked. That fact-checked post was re-posted almost two weeks later by a different user, and no fact check was applied.

The remaining two claims refer to apparent deaths caused by the Pfizer-BioNTech vaccine. The first claims that 53 people died in Gibraltar after receiving the vaccine. This was later debunked by Full Fact and Facebook indicated this on the post.

The second claim linked the deaths of 23 people to the vaccine. This was not flagged as misleading even though Reuters’ fact checking team concluded that the claim was missing context. The Norwegian Institute of Public Health investigated 13 of the 23 reported deaths and, as of 18th January, had not concluded that the vaccines were to blame.

Facebook's affordances

The affordances of Facebook allow users to disseminate false claims by posting videos, links, photos and articles. BitChute, a video platform associated with extremist material and minimal content moderation, was heavily utilised to share videos of Cahill. Yet, only some appeared with fact checks. The chart shows that these videos amassed a total of 100 shares, meaning they had a far broader reach than the confines of the group.

Another evident trend was the echo chamber effect Facebook enables. The same users were members of multiple groups and posted the same claims and links across all the groups while also sharing misleading information in the comments sections of other users' posts.

The comment sections were often the key site for mis/disinformation. In many instances, users shared vaccine related articles from reputable news outlets. The corresponding comment sections for these posts acted as an unpoliced landscape for harmful agendas.

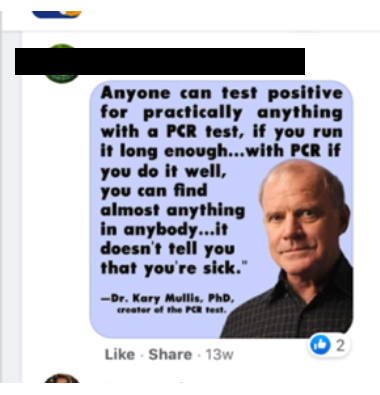

Some comments included false claims that were misattributed to medical professors as in the example below. Other comments claimed to have uncovered "confidential" documentation to prove disinformation claims.

The impact of vaccine misinformation spreads far beyond the confines of Facebook groups and online communities. Some of these groups also host and organise anti-lockdown protests, showing how online vitriol can lead to offline danger.

Currently, the European Union is reviewing its Code of Practice on Disinformation and will develop a new set of standards for platforms to counteract disinformation.

Trudy Feenane is a journalism student at DCU School of Communications.